GAN vs Style Transfer Image Acceptance

Computer Vision (6.869) Class Project: Spring 2021

Introduction

There are many techniques for generating unique images, including style transfer and generative networks. In this project we used style transfer and Generative Adversarial Networks (GANs) to create images in two categories: an indoor scene and an outdoor scene. For the indoor scene we used images of bedrooms, and for the outdoor scene we used images of mountains (both pulled from the Places365 dataset). To measure the quality of the generated images, we used the Inception Score (IS), a metric that scores a model based on the diversity and quality of its images. We then sent a survey containing some of our generated images and real images from the dataset to human participants, asking them to rate how natural each image looked and to give their class prediction for each image. The main goal was not only to test how style transfer compares to a GAN, but also to test whether current automated metrics can predict human acceptance.

Summary

This project can be broken up into 4 main steps:

- Training the style transfer models and generating images using style transfer

- Training the GAN models and generating completely artificial images

- Calculating the Inception Score for each of the models above

- Sending a sample of the dataset images and the generated images to human subjects and getting their scores

Step 1: Style Transfer

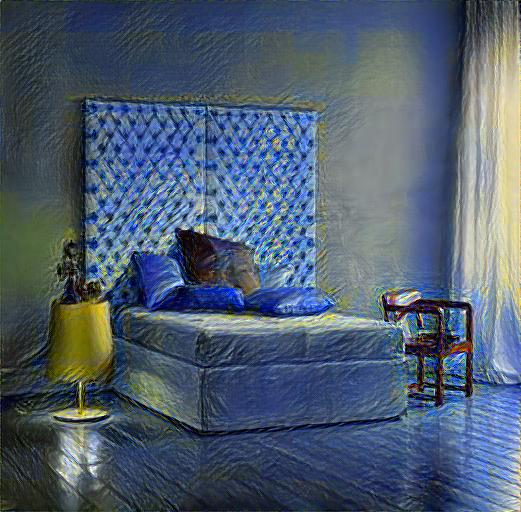

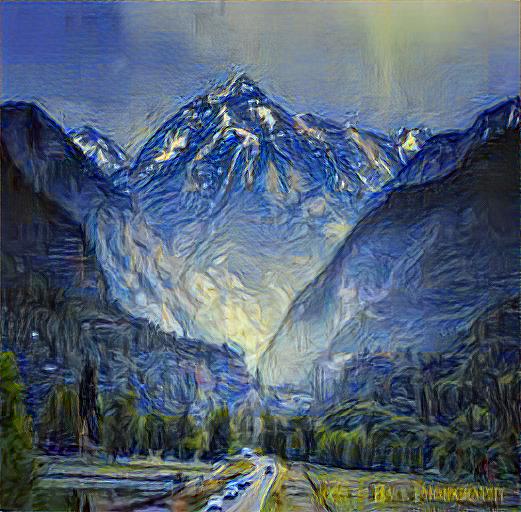

Style transfer is built on the idea that every image has a certain "style", most recognizable in the painting styles of different artists (e.g. the style of Starry Night by Van Gogh). Style transfer takes two images: one with the target content and one with the target style. It essentially redraws the content image in the style of the other image.

We trained a model based on VGG-19 to perform our style transfer. Below is a sample of the style transfer images used in the human survey, alongside their original counterparts.
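The exact loss used to train the model is not given here. As a minimal sketch, assuming the common Gram-matrix formulation of style transfer (Gatys et al.), the "style" of an image can be summarized by the correlations between feature channels, while "content" is compared activation by activation. The function names and the toy feature maps below are illustrative assumptions, not the project's code; in practice the features would come from layers of a network such as VGG-19.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    `features` is a list of C channels, each a flat list of N activations.
    Returns a C x C matrix normalized by N.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)


def content_loss(generated, content):
    """Mean squared difference between activations at the same positions."""
    total = sum(
        (a - b) ** 2
        for fg, fc in zip(generated, content)
        for a, b in zip(fg, fc)
    )
    return total / sum(len(f) for f in generated)


# Toy example: the second feature map is the first with positions swapped.
original = [[1.0, 2.0], [3.0, 4.0]]
shuffled = [[2.0, 1.0], [4.0, 3.0]]
print(style_loss(original, shuffled))    # Gram matrices ignore spatial position
print(content_loss(original, shuffled))  # content comparison does not
```

Because the Gram matrix sums over spatial positions, it captures which features co-occur rather than where they occur. That is why it is a reasonable stand-in for style while the content term preserves layout.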

Step 2: GAN for Image Generation

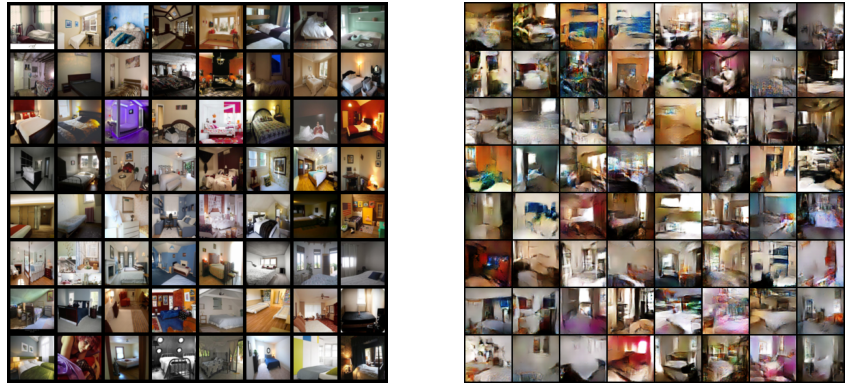

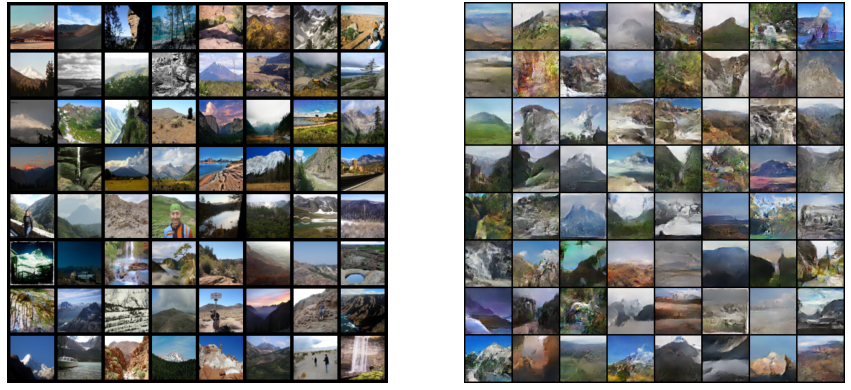

The second type of computer-generated image we wanted to test comes from a model that essentially "draws" an entirely new image. For this we used a Deep Convolutional Generative Adversarial Network (DCGAN), a type of GAN designed specifically for image generation and composed of many convolutional layers.

GANs work by having two networks, the generator and the discriminator, "compete" in a zero-sum game. The discriminator's job is to tell the generated images apart from the training images, while the generator learns by seeing which of the images it creates can "trick" the discriminator into classifying them as real.
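The zero-sum game described above is usually written as the minimax objective from the original GAN formulation (Goodfellow et al.). It is shown here as the standard textbook form; the exact loss used to train the DCGANs in this project is not stated, so treat the symbols below as assumptions. $G$ is the generator, $D$ the discriminator, $x$ a training image, and $z$ a random noise vector fed to the generator.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make $D(x)$ close to 1 for training images and $D(G(z))$ close to 0 for generated ones, while the generator tries to push $D(G(z))$ toward 1.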

We had two DCGANs, one for the bedroom class and one for the mountain class. Below is a sample of the training images (left) and the images that the DCGANs generated (right) for each class.

Step 3: Inception Scores

The Inception Score is a commonly used measure of how realistic a set of generated images is. It is meant to capture two things: whether the images have variety and whether each image belongs distinctly to one class.

For our Inception Score calculation, we used the Inception v3 model described by Szegedy et al. The pretrained Inception v3 model included with PyTorch is trained on ImageNet classes, so Inception Scores calculated from this model would have a range of [1, 1000], since the model supports 1,000 classes. However, since we only used two classes (mountains and bedrooms), we retrained the Inception v3 model to calculate the score over those two classes, making the score range [1, 2].

A higher score indicates that the generated set is diverse and that its images distinctly look like the different classes.
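To make the score range described above concrete, here is a minimal sketch of the usual Inception Score formula, IS = exp(mean over images of KL(p(y|x) || p(y))), where p(y|x) is the classifier's prediction for one image and p(y) is the average prediction over the whole set. The function name and the hand-written probabilities are illustrative assumptions; in the project the probabilities would come from the retrained two-class Inception v3 model.

```python
import math


def inception_score(probs):
    """Inception Score from per-image class probabilities.

    `probs` is a list of probability vectors, one per image.
    """
    n = len(probs)
    k = len(probs[0])
    # Marginal class distribution p(y): the average prediction over all images.
    marginal = [sum(p[c] for p in probs) / n for c in range(k)]
    # Mean KL divergence between each prediction and the marginal.
    # Terms with zero probability contribute nothing (0 * log 0 = 0).
    mean_kl = sum(
        sum(pc * math.log(pc / marginal[c]) for c, pc in enumerate(p) if pc > 0)
        for p in probs
    ) / n
    return math.exp(mean_kl)


# Confident predictions spread evenly over both classes: close to the maximum of 2.
print(inception_score([[1.0, 0.0], [0.0, 1.0]]))
# Confident predictions that all pick the same class: no variety, score of 1.
print(inception_score([[1.0, 0.0], [1.0, 0.0]]))
# Completely uncertain predictions: no distinct class, score of 1.
print(inception_score([[0.5, 0.5], [0.5, 0.5]]))
```

With two classes the score can only reach 2 when every image is classified confidently and the set is split evenly between the classes, which is why both distinctness and variety are needed for a high score.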

We created two initial sets of images, one for each part of our project, and calculated a preliminary Inception Score for each. The sets are:

- All 128 generated GAN images (64 per class), giving us an Inception Score of 1.3716205

- All 30 style transfer images (15 per class), giving us an Inception Score of 1.3192803

Step 4: Human Survey

For our human survey, we created three sets of images in four categories, for a total of twelve sets of images. The sets are as follows:

- Only style transfer images

  - high class prediction probability (>0.95), Inception Score of 1.7898717

  - medium class prediction probability (~0.8), Inception Score of 1.7898717

  - near equal prediction probability (~0.5), Inception Score of 1.7898717

- Only GAN images

  - high class prediction probability (>0.95), Inception Score of 1.8755617

  - medium class prediction probability (~0.8), Inception Score of 1.33302

  - near equal prediction probability (~0.5), Inception Score of 1.0380573

- Style transfer bedrooms and GAN mountains

  - high class prediction probability (>0.95), Inception Score of 1.8774519

  - medium class prediction probability (~0.8), Inception Score of 1.3349577

  - near equal prediction probability (~0.5), Inception Score of 1.0455266

- GAN bedrooms and style transfer mountains

  - high class prediction probability (>0.95), Inception Score of 1.7880352

  - medium class prediction probability (~0.8), Inception Score of 1.7880352

  - near equal prediction probability (~0.5), Inception Score of 1.7880352
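As a rough sketch of how images could be sorted into the three probability levels listed above, the function below buckets an image by its highest class probability. The function name, the bucket labels, and especially the tolerance around the "~0.8" and "~0.5" targets are assumptions for illustration; the selection procedure actually used for the survey is not described here.

```python
def confidence_bucket(probs, high=0.95, medium=0.8, near_equal=0.5, tol=0.05):
    """Assign an image to a survey bucket from its class probabilities.

    `probs` holds the classifier's probabilities for one image, e.g.
    [p_bedroom, p_mountain]. `tol` is a hypothetical tolerance for "~".
    Returns "high", "medium", "near_equal", or None if no bucket fits.
    """
    top = max(probs)
    if top > high:
        return "high"
    if abs(top - medium) <= tol:
        return "medium"
    if abs(top - near_equal) <= tol:
        return "near_equal"
    return None


print(confidence_bucket([0.97, 0.03]))  # high
print(confidence_bucket([0.20, 0.80]))  # medium
print(confidence_bucket([0.52, 0.48]))  # near_equal
print(confidence_bucket([0.65, 0.35]))  # None: falls between the buckets
```

Images that fall between the buckets are simply left out in this sketch, which keeps the three groups clearly separated.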

For each individual image, we asked:

- How natural does the image look? On a scale of 1-10, 1 being “very unnatural” and 10 being “very natural”

- How much does the image look like a bedroom or a mountain? On a scale of 1-10, 1 being “bedroom” and 10 being “mountain”

Results Overview

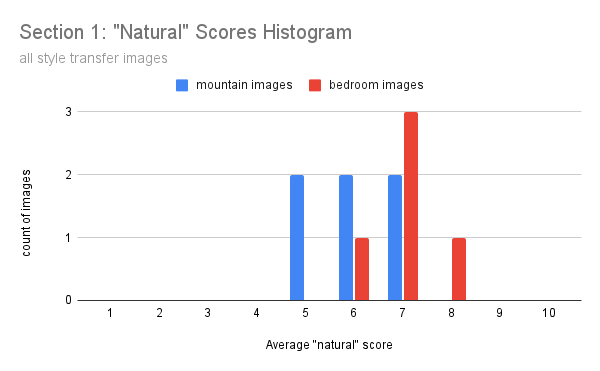

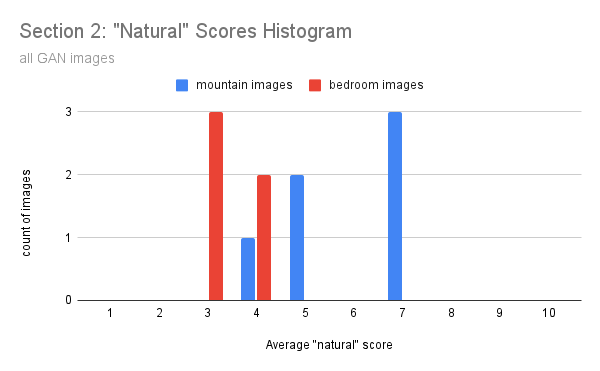

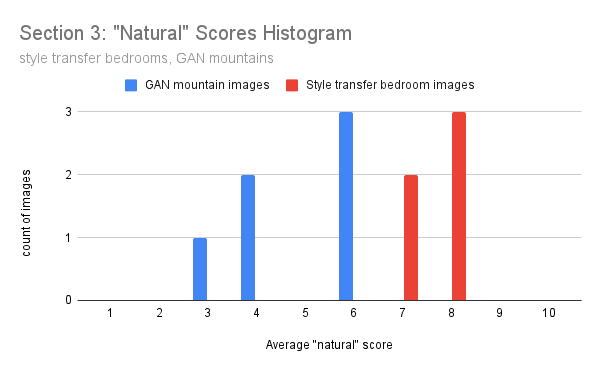

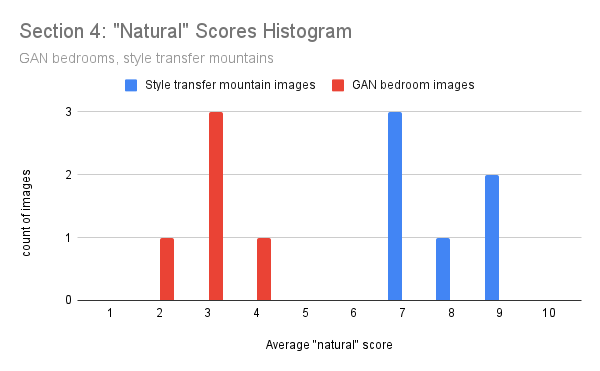

After sending out the survey and collecting responses from over 200 participants, we generated the following figures comparing human scores and Inception Scores for each of the groupings of images described above. In the histograms, all bins are left-closed. More detailed analysis and results are included in the final write-up at the end of this page.

From the graphs above, we can come to three conclusions:

- Style transfer images are on average considered more natural than GAN generated images

- Humans find that GAN mountains are more natural than GAN bedrooms, but the opposite is true for style transfer images

- The human probability assigned to an image is a good indicator of the naturalness rating when comparing style transfer to GAN, but not for only GAN images

Final Paper and Full Project Report

This work was completed in Spring 2021 for the final project in 6.869 Advances in Computer Vision (a graduate level course at MIT).

The full paper with results is included below. It can also be viewed and downloaded here.