MIT Media Lab: Project Us

Undergraduate Research Work from Fall 2020-Spring 2021

Introduction

Project Us aims to promote empathy by using different modalities (voice and vision) to predict and share how a user and their conversation partner feel about the interaction. The project has gone through several iterations; the most recent information from the Media Lab can be found here, and the current project homepage can be found here.

On this page I share the state of the project as of June 2021, when I graduated from MIT as an undergraduate and stopped being involved. Project Us is/was part of the Fluid Interfaces Research Group at the Media Lab, led by Prof. Pattie Maes. The project was, and still is, led by Dr. Camilo Rojas of the Fluid Interfaces group. During my time on the project I worked on many aspects of it, including web development, production pipeline development, user interface design, model development (training, testing, and deploying), and helping to manage and support other undergraduate team members in their work. The project was one of four chosen to be showcased at the Fall 2020 Media Lab Member's Week.

Project State in June 2021 and Walkthrough

Homepage

In June 2021, Project Us was used alongside a call that two users held on another platform. While on the call, the users navigated to the Project Us homepage, shown below:

Tutorial

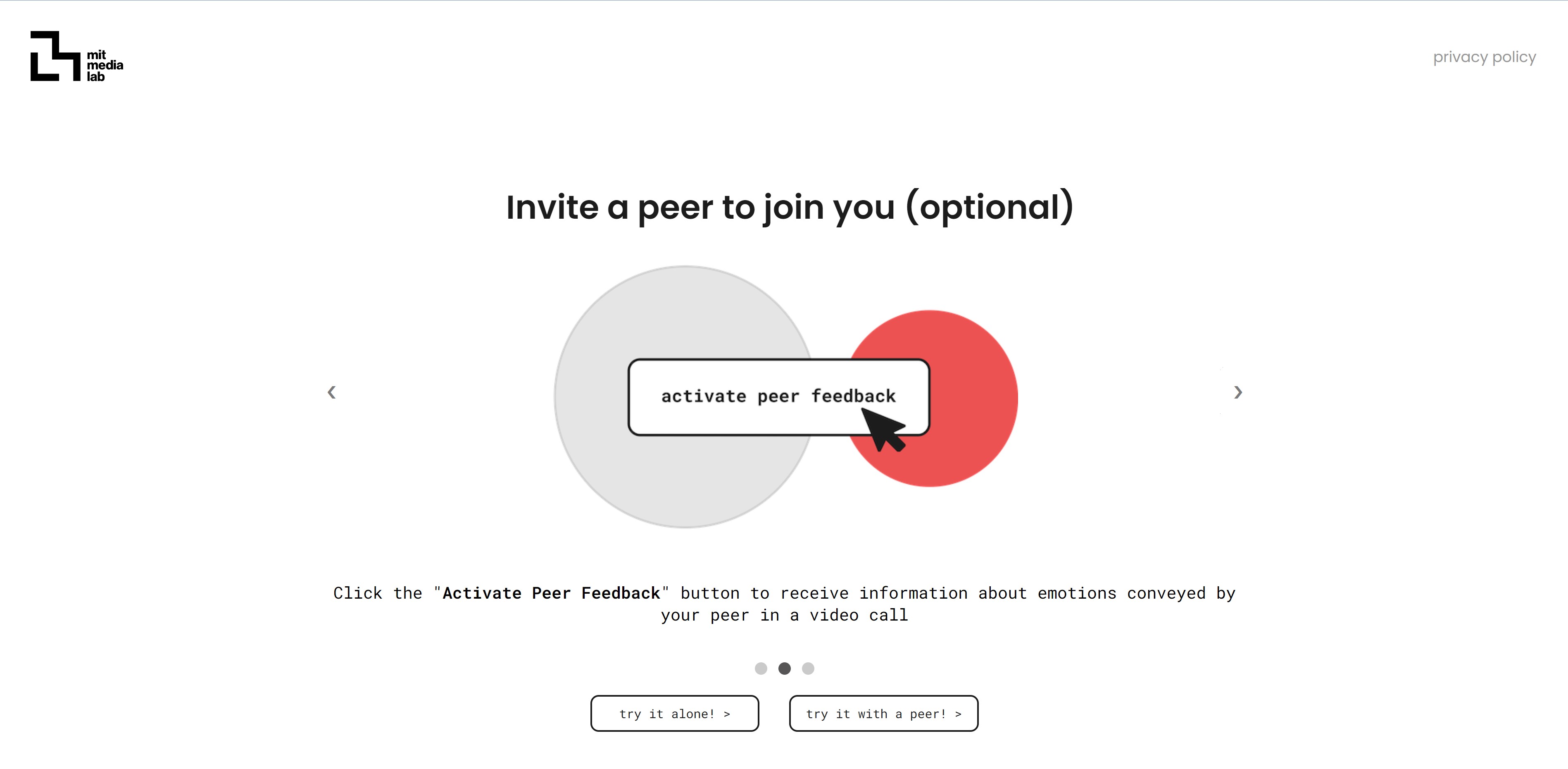

From there, the users would go through the tutorial and walkthrough, with the option to try it either alone or with a conversation partner.

Main Page

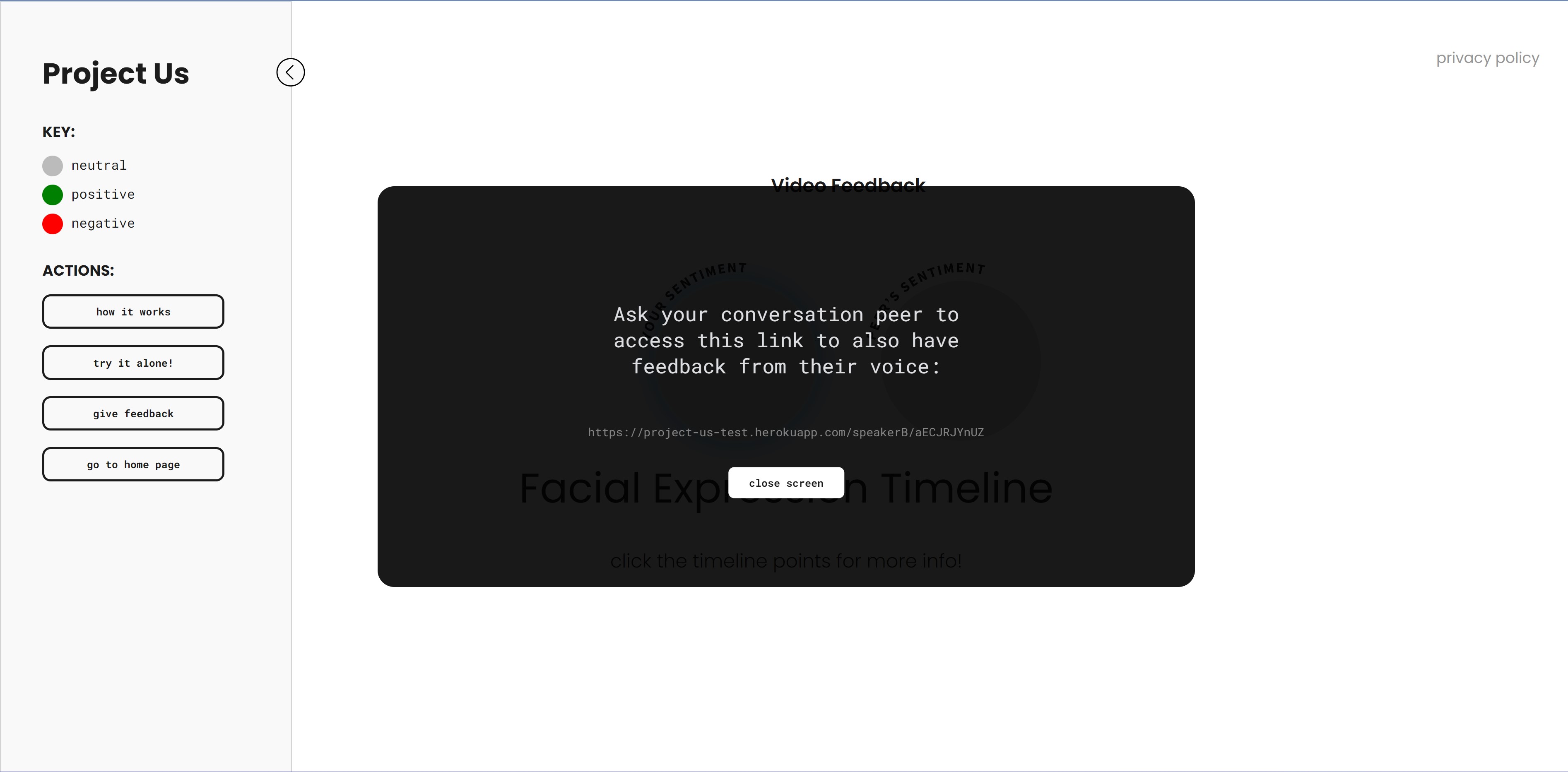

Users in a conversation with a partner would then be greeted with this initial screen, where they could press the circle to get a prompt to share a link with their conversation partner. Sharing the link gives both users real-time insights into each other's emotions.

Start screen before sharing the link with the partner

Screen prompting the user to share the link with their conversation partner

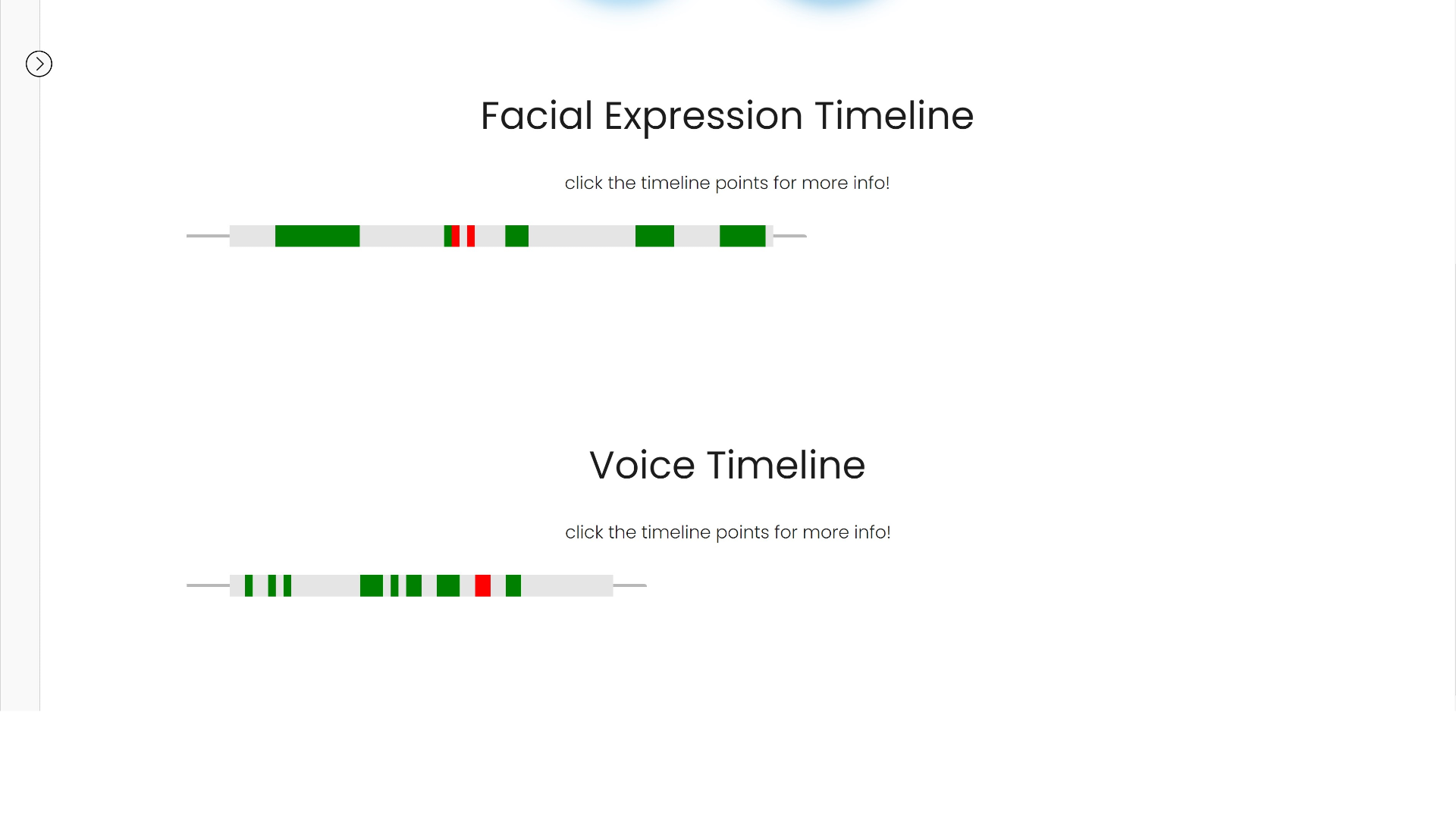

Once the user has shared the link with their conversation partner, they can start to see their partner's predicted sentiment, based on voice and facial expression. The facial expression timeline below that shows their facial expressions over time, and clicking a data point shows the time and the picture that resulted in the prediction.

Scrolling down further reveals the voice timeline and its predictions. Clicking a point on this timeline shows the predicted text and the corresponding audio clip.

These two timelines are kept somewhat out of view so that the user can use them as a reflection tool after the conversation or during natural breaks, and so that they do not get in the way of the conversation partner's predictions. This also encourages users to interact more naturally when using the tool and helps them focus on how their conversation partner is feeling.

The most recent information from the Media Lab can be found here, and the current project homepage can be found here.